August Evrard, PhD, Arthur F. Thurnau Professor of Physics and Astronomy

Cait Holman, PhD, Associate Director of Research & Development

@chcholman

Seth Saperstein, College of Literature, Science, and the Arts Senior and Data Science Fellow

Kyle Schulz, Data Scientist

@kwschulz_um

It’s a new semester! Students, fresh off a week of celebrations and newly formed friendships, are starting to settle into the academic grind. Just a few stories below the Center for Academic Innovation’s offices in the Harlan Hatcher Graduate Library, the reference room has suddenly sprung back to life with studying students. The first exams, now just a few pages away in brand-new M-Planners, loom menacingly. The hushed chatter turns toward the magic word, “studying.”

“Studying” is loosely defined as devoting time and attention to learning. The concept itself is simple; the harder question is how. Students are rarely taught how or when to study, and the abundance of study resources provided by professors makes this even more complicated: many of those resources, while useful, do not accurately represent the assessments students will actually take.

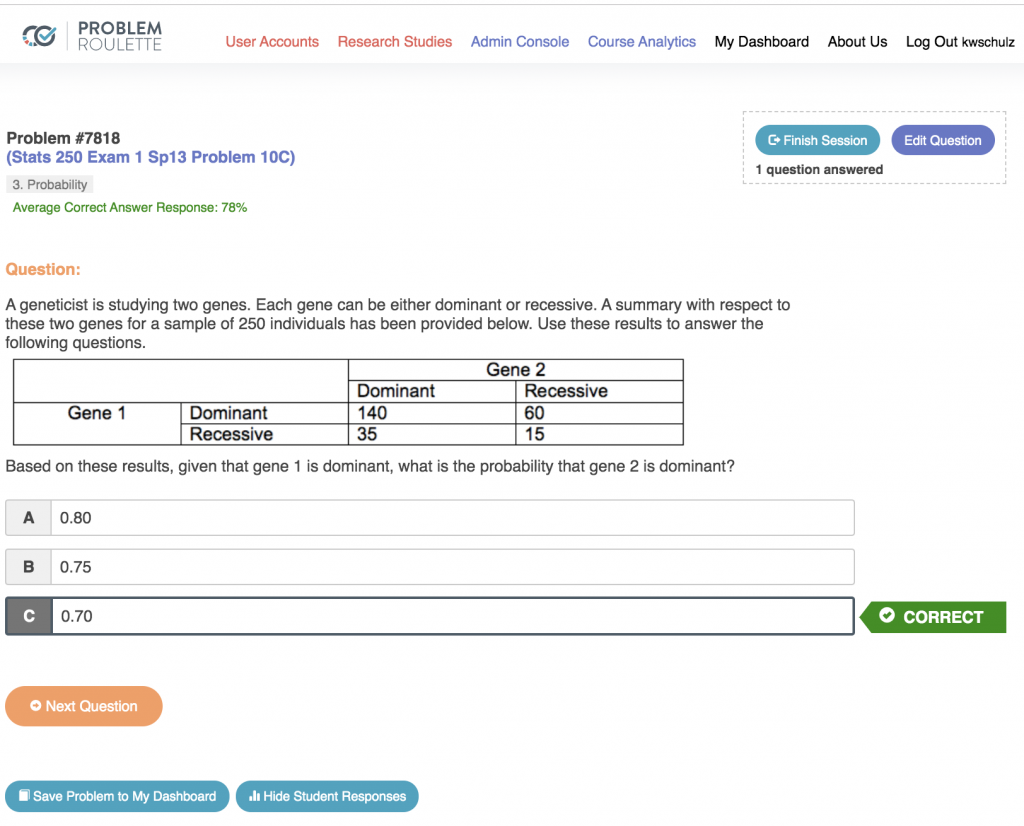

In 2011, August Evrard, PhD, Arthur F. Thurnau Professor of Physics and Astronomy, realized that study resources such as past exams were not equally accessible to all of his introductory physics students. That realization led to Problem Roulette, a “points-free practice zone” where every student can access previous exam questions (you can hear the full origin story from Professor Evrard in this podcast). Produced in partnership with the Center for Academic Innovation, the platform now supports 12 courses across seven departments and includes features such as group study, exam mode, and Name that Topic mode to support different study methods.

Since 2017, more than 10,000 University of Michigan students have attempted more than two million questions. The tool holds a wealth of learning data, including distinctive patterns in students’ study behaviors along with the outcomes they earned. This summer, our Research & Development team began assessing the impact of Problem Roulette on students’ exam performance, with two goals: improving the tool and advising students on how and when it is most helpful to use it.

One issue we often run into when analyzing our tools’ effectiveness lies in how our samples are obtained. Because Problem Roulette is an elective study resource, we cannot be certain that the two populations (users and non-users) don’t differ in some fundamental way, unrelated to Problem Roulette, that could produce differences in grade outcomes. We know from past learning analytics studies that the strongest predictor of student success is grade point average: how a student performed in past courses is likely to predict the grades they will receive in future courses. For this work, we calculated each student’s cumulative grade point average at the end of the semester in which they used Problem Roulette, but excluded the grade they earned in the course that used Problem Roulette. We call this “Grade Point Average – Outside,” or GPAO. By including GPAO as an additional factor in our model, we could compare users and non-users with similar academic histories, whom we would generally expect to perform similarly, and see how they actually performed.
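To make the GPAO definition concrete, here is a minimal Python sketch. It assumes a credit-weighted GPA on a 4.0 scale and a transcript that holds every grade through the end of the semester in which the student used Problem Roulette. The `CourseGrade` record, the `gpao` function, and the course names are hypothetical illustrations, not the team’s actual analysis pipeline.

```python
from dataclasses import dataclass


@dataclass
class CourseGrade:
    """One graded course on a transcript (hypothetical record layout)."""
    course: str
    grade_points: float  # assumed 4.0 scale, e.g. 4.0 = A, 3.7 = A-
    credits: float


def gpao(transcript: list[CourseGrade], target_course: str) -> float:
    """Credit-weighted cumulative GPA that excludes the target course.

    Assumes `transcript` holds every grade through the end of the
    semester in which the student used Problem Roulette.
    """
    # Drop the course whose outcome we are trying to explain.
    outside = [g for g in transcript if g.course != target_course]
    total_credits = sum(g.credits for g in outside)
    if total_credits == 0:
        raise ValueError("no graded credits outside the target course")
    # Weight each grade by its credit hours, then normalize.
    weighted = sum(g.grade_points * g.credits for g in outside)
    return weighted / total_credits


if __name__ == "__main__":
    # Toy transcript for illustration only; not real student data.
    transcript = [
        CourseGrade("PHYS 101", 3.0, 4),  # the course that used Problem Roulette
        CourseGrade("MATH 101", 4.0, 4),
        CourseGrade("ENGL 101", 3.7, 4),
        CourseGrade("CHEM 101", 3.3, 3),
    ]
    print(f"GPAO: {gpao(transcript, 'PHYS 101'):.2f}")  # prints "GPAO: 3.70"
```

In this toy case the PHYS 101 grade is dropped, so GPAO is (4.0 × 4 + 3.7 × 4 + 3.3 × 3) / 11 = 3.70, whereas an overall GPA that included PHYS 101 would be about 3.51. Leaving the target course out keeps the outcome being studied from leaking into the predictor used to adjust for it.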

So what did we learn?

We analyzed data from ten courses that used Problem Roulette in the last year and found significant positive performance differences in half of them, with users earning between 0.1 and 0.3 letter grades higher than non-users. These initial results are reassuring and can guide the iteration process for future releases. We’re asking ourselves, “What is it about these five courses that has led to significant improvements?” We also hope to identify patterns in the behaviors of students who use Problem Roulette to improve their performance. As Problem Roulette continues to grow, we hope students will evolve and learn with it.