Jeremy Nelson, Director of XR Initiative

In this week’s MiXR Studios podcast, we talk with Anıl Çamcı, assistant professor of performing arts technology in the University of Michigan’s School of Music, Theatre & Dance. Anıl teaches courses in immersive media to explore audio in virtual spaces. His work focuses on the intersection of virtual reality, human-computer interaction, and spatial audio. Anıl has been working closely with the XR Initiative to help share his expertise with other faculty on how to teach with XR.

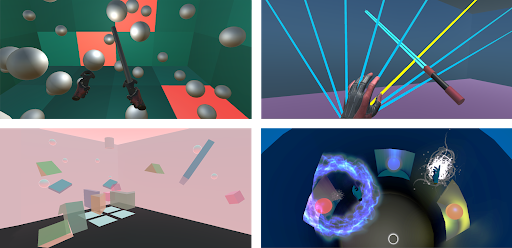

His work is rooted in sound and immersive sound design. He worked with the AlloSphere at UC Santa Barbara and continued his research at the University of Illinois at Chicago’s Electronic Visualization Laboratory. Anıl came to U-M in 2017 to research and teach musical interaction design, including his course Immersive Media, which teaches students about XR technology by having them use it. Students in this course learn the fundamentals of VR and audio design and then create their own VR experiences in tools such as Unity. The work that students produce in this course is amazing. Over the course of a semester, students learn to program and create VR soundscapes and environments, and they are at the forefront of shaping the future of VR content creation.

Anıl was a recipient of our inaugural XR Innovation Fund awards in 2020 for his project to create cross-platform tools for immersive audio design. This project will build on his work with Inviso, a web-based spatial audio creation tool, to extend this toolkit into an SDK or plugin for platforms such as Unity and Unreal Engine. This work will be important for bringing more sound design and spatial audio into XR spaces and allowing content creators to develop richer experiences faster and with more fidelity.

Anıl and I spend a good amount of time talking about the design and development challenges of the current iteration of XR tools and the importance of an organization like U-M being at the forefront of new devices and systems. We explore ideas for future iterations of development that are more aligned with other creative tools for film and audio production, which offer immediate action-perception feedback loops. XR technology changes so rapidly each year, with major changes to hardware in terms of physical components and functionality, that it is important for the University to continue to invest and ensure faculty have access to newer technology so they can keep pushing the limits of what can be taught and learned. We conclude our conversation by talking about the need for strong partnerships with XR corporations and the necessity of creating the next generation of XR content creators.

I enjoyed talking with assistant professor Çamcı and learning more about his path to XR and how he will shape the future of VR with his important work in spatial audio. Please share with us what you would like to learn more about in the XR space at [email protected].

Subscribe on Apple Podcasts | Spotify

Transcript: MiXR Studios, Episode 17

Jeremy Nelson (00:05):

Hello, I’m Jeremy Nelson. And today we are talking with Anıl Çamcı, who is an assistant professor of music at the University of Michigan School of Music, Theatre & Dance. We are talking about his work in immersive audio and the VR design course he teaches, coming up next in our MiXR podcast. Welcome. And thank you for joining us today. Thanks for having me. Yeah. We’re really excited to talk with you and learn more about all the cool work you’re doing. You know, every time I talk to other folks and tell them we’re working with someone in our School of Music, they just get super excited and love to learn more about that. So, yeah. How did, how did you get into this? Like how did you start with VR and think about this from, from your perspective?

Anıl Çamcı (00:57):

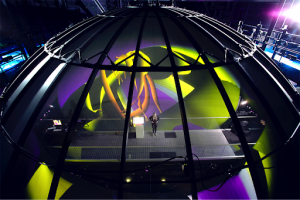

Yeah, yeah, sure. Um, so my work deals with VR and XR in general in a relatively multimodal context. Um, but I do come from an audio and music background and I mean, as you know, sound is an inherently immersive phenomenon, right? You don’t need to make it 3D. It takes up volume, it surrounds you right away. And that disposition of sound has been, um, acknowledged in audio research very early on, right? The moment we figured out how to do sound reproduction, one of the first questions was like, how do we simulate other environments? And in that sense, like my work has been involved in immersive systems for quite a while. Um, but it became more explicit, um, during my studies at the UCSB Media Arts and Technology department, where we have the AlloSphere, which is this immersive facility, it’s this giant sphere, uh, built into a three-story building.

Anıl Çamcı (02:01):

It’s got like full, um, surround projection and sound. And then from there, um, I started a sonic arts graduate program at the Istanbul Technical University where part of the curriculum was heavily involved in interactive and immersive systems and spatial audio. And I also, um, taught workshops and summer schools. One example that comes to mind is the media architecture and spatial systems summer school we organized, where we combined robotic fabrication with VR. So the participants designed these human-scale structures with a robotic arm, and then they created interactive versions of that with VR. And this was with the developer kit back in the day with Leap Motion strapped onto them, everything programmed and yeah, everything programmed in like openFrameworks. So very like Wild West.

Jeremy Nelson (03:01):

Yeah. You were in the beginning

Anıl Çamcı (03:04):

Modern, modern VR. It was ugly, but you know, we made it through, there was some really interesting work that came out of those sort of projects. And then I was a research associate at the University of Illinois at Chicago’s Electronic Visualization Laboratory, which is a, um, sort of a pioneering institution in VR. That’s where the CAVE system was invented. So yeah, I got to work there for about two years doing both research and instruction, um, at the intersection of VR, human-computer interaction, and audio. I got to build both hardware and software systems for, um, VR and immersive systems. And, um, about three years ago, I came to the University of Michigan, sort of continuing that line of research here. I’m very involved in sort of musical interaction design for VR and designing these creativity support tools that lower the barrier of entry for content creators to work in this domain.

Anıl Çamcı (04:09):

I do a lot of like, um, service and leadership initiatives, both on campus and internationally. And, um, in my second semester here, I started teaching a course called Immersive Media, which is a course that not only utilizes XR technology, but it’s about XR technology and its applications to performing arts. Um, students get to like get a lot of experience in the diverse range of XR technologies, like game engines, AR tools, VR systems, 360 media production, ambisonic sound, and they build sort of all kinds of different experiences. These VR systems, they do a number of them and it’s a very practice-based, very project-based, very hands-on class. And they also get a lot of experience in sort of critical theories and histories of XR. And that course is part of our recently introduced graduate certificate program in XR that’s a cross-campus initiative. Uh, we just admitted our first students and we’re launching the program in the fall. So that’s very exciting. Um, yeah. And, um, I guess I’m involved in a number of sort of interdisciplinary projects with folks around campus, like with nursing, with engineering, um, with my peers at the School of Music, Theatre & Dance, sort of exploring many facets of, you know, VR and audio and sort of interaction design across, um, different projects. And that’s kind of like a global picture, I guess.

Jeremy Nelson (05:53):

Yeah, no, it’s great. It’s a fascinating journey. That was one of the things that drew me to the position here was just the diversity in faculty and research and the cross-disciplinary, uh, interactions already occurring. So I was like, Oh, there’s so much, so much here to, to work with and to explore that. Yeah, well in this course, like what was the interest? What are the reactions of some of the students, what are some of the things that they’ve done?

Anıl Çamcı (06:22):

Oh, I mean, it, it was, it was great. I mean, I, I saw no like, uh, departmental or unit friction in the introduction of that course, everyone was super stoked on the administrative side of things. And, um, the first year I introduced it, I mean, I got a very sort of diverse, um, body of students, you know, students from our department, performing arts technology, music students, engineering students, CS, architecture, design. So I think that was like one of the best scenarios for that kind of class. And it continued, but it continued to be that way actually. And that sort of fed into the, I guess, the success, um, of the course. Um, I think the students were really happy. I mean, most of them came in with almost no experience in VR. Um, uh, and they came out with like really engaging, compelling sort of apps and thoughts as to where this can go.

Anıl Çamcı (07:21):

And I mean, I guess that’s one of the benefits of being sort of at the core of this thing early on in that one of the things that I really like try to drill into my students is the idea that they can be decision makers in this domain, right? Because if you look at, if you look at something like music theory or film theory, I mean, you can put a dent in those as well with great work, but there is just so much work in those areas that it takes a lot of effort to make change, but we are now figuring out what VR theory is. You know, we’ll look back in 40 years and say, okay, those were the best practices then. We figured these things out and now we have these standards. And now these, like, there are these new art forms coming out of it.

Anıl Çamcı (08:07):

So, you know, my students obviously coming in, they don’t have a lot of experience, so they can be a little like self conscious about the work that they put in that they come up with. But I always try to like emphasize the fact that, you know, the most experienced VR developer in this most recent generation is maybe four years ahead of them. Right? That’s a, in the grand scheme of things, that’s a very short period of time. So they get to be decision makers, they get to define what we will refer to as VR theory in a couple of decades. So that’s a very exciting time for them to be, um, working in this domain. And that’s sort of reflected in my students’ attitude and the work that they create in class.

Jeremy Nelson (08:53):

Yeah, no, I’ve, I tried a couple of the pieces last fall and I was just blown away. Like I hadn’t even considered like the types of experiences they were creating. Would you mind sharing a couple of those that just really stood out to you?

Anıl Çamcı (09:09):

Yeah, of course. Um, I mean, one example that comes to mind is, is a relatively early on assignment. It’s called immersive sonic environment design. And that’s kind of like, not even mid semester, maybe week six or seven. So like this is like six weeks into them into their first introduction to VR. And, um, they get to work on much more advanced projects later on. They work in groups to build these sort of massive systems for, you know, either musical interaction design or 360 media production. But this project is their last individual project. And I designed it relatively open-ended in that it can end up being like a game type thing, or it might be a VR installation, or it might be just an experience that you navigate through and sort of that’s reflected in the student work as well. They come up with really diverse range of applications in response to that prompt.

Anıl Çamcı (10:07):

And although it’s called immersive sonic environment design, it’s not a solely like audio experience, right? Their work originates from a sonic immersion concept, this might be something that they encountered in their real lives. This might be something that they’re inspired by. Um, but they start from that sonic immersion idea and it evolves into a full-fledged, multimodal, interactive VR, um, system that they design. And the students came back with just like, I mean, it’s, it’s so diverse. Um, the kinds of projects that they come up with and like, some of them designed these, I guess, downtown environments, cityscapes, others design their home environments with their roommates, maybe watching TV and you get to walk around the space and yeah, they’ve, some of them designed these intense like traffic situations. Um, one example that comes to mind is, um, recently, um, last semester, one of my students was working on, um, I think like, I think he was designing like a nature environment first and that week.

Anıl Çamcı (11:18):

Um, he saw the film The Lighthouse. I don’t know if you saw it. Um, I have, I have watched it since. Um, the film has this sort of like really intense sound design to it. That’s kind of a defining quality of that film. And after he saw it, he sort of like scrapped his project and thought, okay, I need to like focus on this film. He was so inspired by it. So he basically like within the span of a week, he recreated the island in, in the, in The Lighthouse, like the film. And he basically sort of extracted the essence of what inspired him in the sound design and made it into this like interactive thing. And at the time I had not seen the film and just like going through the, um, the landmarks that he picked from the film and sort of like, uh, mashed them into this really interesting sonic experience, um, was just so inspiring.

Anıl Çamcı (12:12):

Another student, um, designed a lodge from Twin Peaks, like this sort of intense, um, imaginary space and that sort of taps into my childhood because I watched Twin Peaks as a child. And I was like personally really affected by the lodge and seeing my student using VR in a really creative way to recreate that was so moving. Um, and I think this is, this is kind of a turning point for me. Um, for the most recent iteration of VR in that, you know, like I said, it’s not even their like big polished project in the class, it’s sort of an early-on project, right. But the ability of a person who just had like six weeks of VR development tutoring, um, to be able to use this platform in such a creative manner, um, to come up with these like sort of really diverse and interesting ideas that would otherwise not be possible with any other platform is just what tells me that, you know, there’s this really bright future to, you know, not only artistic expression in this domain, but for content creators in this domain.

Anıl Çamcı (13:22):

And some of the apps that you might have tried had to do more with like designing these VR instruments, um, you know, we’ve had students designing these sort of gravitational systems that, you know, play with the idea of polyrhythmicality in music and how like the user can come in and change the architecture of the environment to tamper with the polyrhythmicality in that system. So it’s just super open-ended and I’m really inspired by the extent to which, um, our students are able to sort of exploit that open-endedness, right? Like what do I do if there’s no gravity, what do I do if there’s no limit to what the extent of this world could be? What do I do? What if the instrument can be the size of a planet? And yeah.

Jeremy Nelson (14:12):

Right, right. Yeah. It was, yeah, it was fascinating to me. And it was just super, yeah, like you said, creative and inspiring and just impressive to go from zero to that in a matter of weeks. Yeah, yeah, yeah. And it wasn’t laid out as like you’re going to learn Unity or you’re going to learn to be a programmer, right. Like no, the tool.

Anıl Çamcı (14:31):

Exactly, exactly. And the ability of them to like, make use of those tools in such creative ways. I think that’s sort of a mark for any emerging media, right? Like music was the killer app for sound reproduction. Cinema was the killer app for film. So I think like these forms of creativity moving forward will be the killer apps for XR in general.

Jeremy Nelson (14:56):

Yeah. Yeah. I hope so. Some of your students are at the center of that. That would be, that’d be awesome. Well, I wanted to talk a little bit about the XR Innovation Fund. You were one of our awardees and the project we’re working on together. Do you mind talking a little bit more about that and what your goal is with that? We’re very interested in it.

Anıl Çamcı (15:17):

Sure. Um, so, um, it’s a project called Inviso that I’ve been working on for quite a while. I mean, um, like the first part of it goes quite a while back and, um, about four years ago we started building a, um, like a browser-based desktop version of the tool. Um, it’s a, it’s an immersive sonic environment design tool, right? The user gets to design these three-dimensional audio spaces with sound objects, sound cones, and sound zones. And like all of those can be made dynamic. So these are some sort of staple elements of a virtual sonic environment, and this tool works in the browser. So the goal there was to make it as accessible as possible, and a lot of work, uh, went into figuring out the best interaction paradigms, right. So we made it super complex to begin with. And then we sort of refined it over a long period of time into what it is right now.

Anıl Çamcı (16:19):

And it can be accessed at Inviso.cc, and it’s a tool that’s geared towards, you know, both novice and expert users. That’s kind of a, um, sort of a defining quality for any creativity support tool. Can someone pick it up and make something useful or meaningful with it? And as they advance with that tool, does that still cater to the user as an expert? And that has been a goal in the desktop version that we seek because a lot of spatial audio design tools are catered for experts, right? There are a lot of concepts, uh, parameter spaces that the, you know, the inexperienced user will not have a lot of idea what they are, but with Inviso the goal was to make it so that, you know, kind of like creating a like 3D immersive version of a, like a stereo pan left, right.

Anıl Çamcı (17:12):

But in a, in a virtual reality environment. So make it so easy that anyone can make something interesting with it. And a lot of my students use it in my Immersive Media class, and now there are like a lot of courses within U-M and across the world that begin to use it in a similar fashion, um, just to create these really complex, really rich sonic environments. So it started out with that. And then obviously the, um, the sort of the logical next step is to bring that into a true XR content context. Right. Um, so to bring it from desktop into VR and AR and mixed reality situations. So we’ve been working on porting Inviso into VR for quite a while now. So in, in essence, we are building a creativity support tool into Unity, which itself is a creativity support tool.

Anıl Çamcı (18:07):

So, um, it basically extends Unity in a way that enables the user to design VR within VR, these sonic environments. And that’s kind of an emerging issue in, in XR in general, right. Um, there are like sustainability issues, but there’s the issue of access, um, where, you know, a lot of developers have a hard time, you know, keeping up with the changes in hardware that are paired with changes in software. I mean, this happened a lot in my class where, um, Oculus would push a firmware update and you would be locked out of your system until you did that update. So, right, right. You know, one issue there is obviously like there’s a time consideration, but you do that update. And then all of a sudden, maybe the Oculus SDK is out of sync with Unity and now your app is not working. And that creates sort of this really unstable situation for the developers and, um, you know, for, uh, for an independent developer, to pour that kind of time into their work and all of a sudden run into bugs that, you know, they don’t know where they’re coming from, right.

Anıl Çamcı (19:18):

Because these updates are pretty sandbox, right. They’re not super clear as to what has been updated. And there are ethical issues as to like, you know, the, the consumer has a right to know, right. What has been changed, but there is the more practical side of things where if we knew exactly what’s been updated, maybe it’s going to be easier for us to dig ourselves out of that bug. So, right, right. That was like one of the main sort of, um, concerns voiced by my students in class that, you know, they would go to the Duderstadt Center to work on their project. And then all of a sudden they need to go through all these updates and their app doesn’t work. And the other issue is, again, going back to this idea of creativity support, the current VR development workflow is pretty clunky. I, I usually like liken it to the punch card days of programming where you exact, where you’ve had this like sort of asynchronous, um, development process, right.

Anıl Çamcı (20:14):

You would program it and then get the results the next day. So, yeah, that’s kind of, what’s going on in VR right now. Right. You work on your computer, like you code or you design, and then you go to your headset, you put it on, try it out. If there’s a bug, you go back, you code a little bit more and then come back, there’s this back and forth. And, um, we are so used to this sort of real-time action-perception feedback loop in our workflows right now, right? When I change something in Photoshop or in a digital audio workstation, I get immediate feedback. So this sort of broken asynchronous workflow is very counterintuitive. And that was another thing that our students voiced as a problem in the current like VR workflow. So we need, um, creativity tools that work within VR. And I mean, our current design paradigm like programming is very text-based, right.

Anıl Çamcı (21:06):

And that doesn’t quite work in VR, or hasn’t been quite yet figured out. There are like attempts, but maybe we need node-based programming platforms. I mean, we’ve had those in audio for a long while, and there are some really interesting applications coming out right now. I don’t know if you’re familiar with this Dreams, um, app on PlayStation, it’s essentially a game, um, that is designed to be a game engine and it’s very node-based and they just introduced their, um, VR sort of adaptation to it. So we need to figure out these ways to develop in VR so that we have this immediate action-perception feedback loop. We don’t have this sort of disjointed, um, creative process. And that’s a, that’s a very big challenge to tackle. And that’s kind of what we’re trying to do with Inviso, right? How do we offload that design process into VR, into AR so that you don’t need to like design on a 2D paradigm and test it out on 3D? You just get to experience it immediately as you design it. And, you know, there is a lot of sort of evidence as to how that improves the work of content creators in the long run. So the project is essentially trying to sort of address that, to bring content creation for immersive sound into XR itself.

Jeremy Nelson (22:32):

Yeah. Well, we’re excited for, to, to use that on some of the other projects we’re working on as well. So yeah. And we’ve been doing quite a bit of work in Unreal Engine, like, so there might be some interesting opportunities in there, with the way they do their Blueprints.

Anıl Çamcı (22:45):

Yeah, yeah, exactly. And they’re doing really interesting work on the audio side of things as well. Like, um, they have like modular synthesizers built into their, um, sort of engine. So there’s a lot of innovation going on there. That’s, that’s really exciting.

Jeremy Nelson (23:01):

It’s, it’s been great. Our team’s excited. Um, in terms of, you know, potential concerns or advice for the future. I mean, do you, what sort of concerns do you have, um, for this? What do you want to see Michigan do more broadly? I mean, you’re, you’re at the heart of it, so, yeah.

Anıl Çamcı (23:21):

Um, I mean, to be honest, I’m very optimistic about VR and XR in general. Um, having said that, I think there are like a couple of, I guess, themes that are potentially problematic at the moment. And, um, I briefly touched upon these, like one is sustainability and the other is access and they’re kind of intertwined, and it’s kind of twofold. There’s the hardware and the software. Um, with the hardware side of things, um, I guess it’s a good problem to have. We’re going through these rapid iterations, right? Each year, we’re in the yearly cycle with headsets right now. And that’s, that’s unlike what we have in something like, say, smartphones, right? They also get yearly cycles, but the, but the bumps are like very iterative, right? Maybe the camera is better. Maybe the screen is improved a little bit. Maybe there’s a new operating system, but with VR, the yearly changes are actually major technological paradigm shifts.

Anıl Çamcı (24:25):

Right. We, uh, we go from, um, I dunno, like outside-in tracking to inside-out tracking that has a lot of implications on how VR is experienced. And then we go from tethered to mobile, to hybrid systems. We get finger tracking. We, you know, we’ll potentially like probably get, um, eye tracking, people tracking next. So these cause like very big changes and it forces the users to update, right? Because otherwise you don’t have access to the full affordance of what VR has to offer at the moment. And it’s not, it’s not a latest and greatest, like wanting the latest and greatest kind of issue. It’s actually a valid need to upgrade. And, you know, that’s kind of reflected in the sort of the policies of the companies as well. I mean, Oculus just recently discontinued support for the Go, which came out what like two, three years ago, I mean, yeah, right.

Anıl Çamcı (25:24):

If Apple discontinued support for an iPhone from three years ago, there would be mayhem. People would, would be furious. Right. I mean, yeah. I mean, and rightfully so, like people would be really angry, but in, in VR that’s kind of necessary and that’s kind of a good problem, right. Because we get to see this like amazing new potential. Um, but at the same time, if you go to my office now there’s the developer kit, there’s the Go. There’s the original Rift, which is technically tech trash. Right, right, right. Like on an individual basis, maybe that’s not a big issue, but at an institutional level, right. We, we have a kind of an issue with, you know, A, how environmentally friendly this is and how sustainable it is. So, I mean, we probably need these sort of institutional, um, sort of partnerships with the industry and you and your team have been doing great work along those lines.

Anıl Çamcı (26:20):

So maybe we need like a, I don’t know, like a subscription system where we get maybe 300 headsets every year. I mean, not free, but you know, like it’s a, it’s a sort of a deal between us and them. So that at the end of the year, if there is a major change in technology, we get to put that into a sort of a recycling program and sure we get the new stuff and that sort of ties into, um, like how we can make these tools accessible to faculty across the board. Because for me, I’m teaching a course about XR. So the onus is on me to provide that gear to my students. If there is no facility on campus, luckily we do have that. Right. With the Duderstadt visualization lab. But if we don’t, I need to have that in my class. But for faculty who may, you know, who might use XR, who actually could make use of XR in maybe their ethnomusicology class or their engineering class, if we don’t provide them with the sustainable model, they’ll just not use it.

Jeremy Nelson (27:25):

Right. I had a conversation today with a new faculty that wants to teach a film class and that’s awesome. She’s like, what, what, what equipment do we have? What, what can I use in this hybrid mode? Like, what’s it like, can we ship stuff? How do we clean it? Like, and those are very timely because of COVID. But exactly. And I think, I think that’s going to be more potential to, for distance education.

Anıl Çamcı (27:48):

And I mean, that’s really exciting. And at the same time, um, these sort of like new standards that we’re implementing due to COVID, I mean, even if we like overcome COVID in the next few months, let’s say, um, I don’t think the, sort of the culture that it came with will, will go away immediately. So like I will have to rethink whether I want to cram my students into a lab in, en masse. So, you know, maybe we like check out these headsets to the students for the semester and at the end of the semester they bring them back. We put those through sanitization and they get used again and maybe at the end of this year, if there’s a new headset coming out, we go through the recycling, um, and come up with like a way to figure out, to bring in the new headsets, because I mean, we need to be at the forefront of the research and these major changes in technology kind of force us to keep up with the technology.

Anıl Çamcı (28:48):

And at the same time companies like Oculus, Microsoft, Valve, HTC, um, they need us to innovate, right, because we really need to like expand. I mean, I would say that’s the, maybe the only existential crisis that XR would have right now, the access to content creators, we need way more of them. And, you know, we’re, there’s a really good example of that, right? The Apple App Store, um, in 2008, when they came out, they made their tools really accessible and that sort of just exploded. Right. And the indie developers are the ones who made it like really sort of indispensable. So with XR, we really need to tap into that sort of weird indie creativity to make XR indispensable. And the companies sort of need us to innovate in that domain. And I, yeah, I, I’m sure you have more ideas as to what they’re up for in terms of partnerships than I do, but I do hope that, you know, we can figure out sort of like more institutional, more structural ways to partner with these companies. So, so that, you know, A, we’re at the forefront and B, we don’t create like a bunch of tech trash each year, right?

Jeremy Nelson (29:42):

Yeah. Yeah. That’s exactly the type of stuff we’re thinking about and working through and trying to come up with, you know, platforms and processes to enable more people to generate content, right? Like the projects we’re doing that, they’re great, you know, it’s very time consuming, you know, it’s hard to scale those out. And so we’re, we’re, we’re also looking at, you know, ways to, to create platforms or bring them in for authoring to generate more content that will then drive purchasing decisions for broader headsets. Exactly. If we buy budget headsets and we only have two pieces. Yeah. That’s not a great investment. Yeah. Yeah, exactly. And that’s, that’s really exciting that there is work being done along those lines. Sure. Well, this has been a great conversation. I’m super excited about the work you’ve been doing and you continue to do and our work together. So thank you for your time today and thanks for your work. Yeah, no problem. I look forward to continuing to work with you guys. It’s really exciting. All right. Take care. Alright, you too. Bye.

Jeremy Nelson (31:09):

Thank you for joining us today. Our vision for the XR Initiative is to enable education at scale that is hyper-contextualized using XR tools and experiences. Please subscribe to our podcast and check out more about our work at ai.umich.edu/xr.