Jeremy Nelson, Director of XR Initiative

In this week’s MiXR Studios podcast, we talk with Alex DaSilva, an associate professor of dentistry, the director of learning health systems, and the director of the H.O.P.E. Lab at the University of Michigan’s School of Dentistry. We discuss Alex’s groundbreaking research on two topics some people find a bit uncomfortable: dentistry and pain.

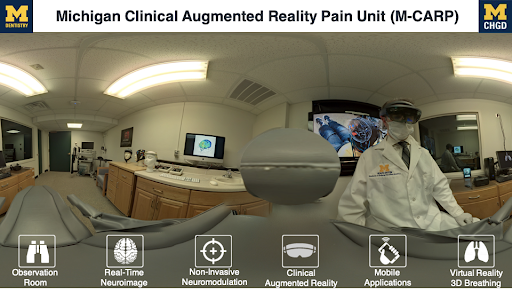

The H.O.P.E. Lab (Headache & Orofacial Pain Effort Lab) is exploring how to use Augmented Reality to visualize pain in real time to support treatment and pain management in dentistry. In addition to AR, the team is using Virtual Reality to explore how VR meditation can help manage pain and anxiety during dental emergencies. Alex is working on the cutting edge of pain research and using XR to visualize treatment in fascinating ways.

Alex describes the work he and his team are doing with the Microsoft HoloLens to process real-time data from patients and project a visual representation of the brain onto the patient. This research allows the team to understand more objectively where pain is located within the brain and then devise better treatments and management plans. Previously, 3D visualization and post-processing took minutes or hours, and the results had to be viewed in a closed system. Advances in HoloLens technology are opening up opportunities to better understand what patients are describing, and combining the data with artificial intelligence allows practitioners to better catalog it and make recommendations. Augmented and Mixed Reality offer more engaging interactions between doctors and patients than computers or tablets do, because the doctor can still look at the patient and stay focused (albeit with a large device on their head).

In addition to Augmented Reality, his team is studying how Virtual Reality can help manage pain and anxiety. They have built a VR visualization of a pair of 3D human lungs that respond to the wearer’s actual breathing. In this experience, patients can see what their lungs look like when they breathe more slowly or more quickly. Preliminary findings suggest that this type of visualization stays with patients much longer than traditional or guided meditation, and its potential impact on pain management is promising. We also discuss how these technologies could be paired with newer telemedicine offerings to support patients who are unable to travel to a clinic or hospital.

Alex and I also discuss ways XR can impact teaching and learning, and Alex sees many ways XR can bring more students and healthcare professionals into the treatment process. One is telemedicine, where a physician or student wearing a HoloLens could stream the encounter to other students so they can see the patient and better understand the situation. Beyond real-time connections with patients, XR can help train students on particular pain conditions, or let them explore regions of the brain in VR to better understand what is happening and where pain is registering.

We conclude our conversation by discussing the University of Michigan’s unique ability to bring XR to so many people across campus. The diversity that is core to the University creates opportunities and affordances for innovation and collaboration that can move the industry forward in more impactful ways. There is such a broad range of people, technologies, disciplines, thoughts, and expertise at the University, and that is one of the things Alex cherishes about what he and his team have been able to do. The future of understanding and treating pain is very promising, thanks to the work of Alex and his team.

I enjoyed talking with Alex and learning more about his work and how he thinks XR will impact the future. Please share with us what you would like to learn more about in the XR space at [email protected].

Subscribe on Apple Podcasts | Spotify

Transcript: MiXR Studios, Episode 23

Jeremy Nelson (00:10):

Hello, I’m Jeremy Nelson. Today we are talking with Alex DaSilva, an associate professor of dentistry, the director of learning health systems, and the director of the H.O.P.E. Lab at the University of Michigan School of Dentistry. We are talking about his work in pain detection and pain management and how he uses a HoloLens for real-time visualization of pain in the brains of his patients, coming up next on our MiXR podcast. Good afternoon, Alex, thank you for joining us today.

Alex DaSilva (00:53):

My pleasure, Jeremy.

Jeremy Nelson (00:55):

Yeah, I’m looking forward to our discussion today. I’m thinking back to when I visited your lab, geez, was it in the fall of 2019, I guess, and seeing all the amazing work you’re doing and the experiments and research you’d set up for me to try out. That was really exciting. For our listeners, would you mind giving a little background on your work and how you got into augmented reality and XR technologies with your studies?

Alex DaSilva (01:28):

Yes. I’m a dentist, so I’m pretty good at provoking pain, but I also study pain. For many years we’ve struggled to help our patients with not only acute pain but also chronic pain. The way I try to help the field is by being a neuroscientist too: looking at the brains of patients suffering from pain, whether dental pain, migraine, cancer pain, or fibromyalgia, and using different technologies to do that. Some of those technologies are associated with XR.

Jeremy Nelson (02:16):

Nice. That’s exciting. So how did you get started? I know you have a HoloLens that you’re doing work with. How did you come across the HoloLens, and how did you begin to explore it? Was it something you’d seen somewhere else, or an idea you had?

Alex DaSilva (02:32):

Well, we started using XR first because we were developing mobile technologies to track pain in patients. We work in collaboration with the 3D Lab at the university, with Eric Maslowski and the team there. Through that, we also developed other collaborations. One of them came from one of our research projects: we image the brains of patients during a migraine attack. We collect data on all the dysfunctions that occur while the patient is suffering with migraine, but we wanted to navigate through that data. We wanted to give that immersive experience to our students too. So we started working with the 3D Lab team to create the experience. We did it in the CAVE: we have this floating brain of the patients, showing what happens to our own painkillers in the brain.

Alex DaSilva (03:44):

And what happens with our dopamine during a migraine attack. These were experiences we could provide, but only for one or two students at a time, bringing them through step by step. So the idea was to use a HoloLens to connect even more with our research, really explore the brains of our patients, and give this experience to several researchers, but also students. So it was not a difficult step to jump to a HoloLens.

Jeremy Nelson (04:31):

Mm-hmm. Yeah. So I’m recalling there was a patient sitting there with a device on their head, and then I could see a 3D brain, right, superimposed over their skull. Can you describe that a little bit more and what you’re trying to study there, or how that was used?

Alex DaSilva (04:53):

Yes, yes. Something interesting about pain is that it’s subjective. You can tell someone, “I’m suffering. I have pain in my head. I have pain here,” and they have to trust you, because they cannot see it. So one of our projects uses light-based sensors, what’s called functional near-infrared spectroscopy: basically, the light crosses the skull and reaches the surface of the brain. As the brain processes and perceives the pain at a high level, that light is reflected back to the sensor, telling us there was activation here and there. That’s how we can see objectively, straight from the brain, whether someone is having pain or not. So what we did, with other collaborators, was develop software to collect that data in real time and send it to the HoloLens.

Alex DaSilva (06:08):

It’s called CLARAi: Clinical Augmented Reality and Artificial Intelligence. So what is it? We collect data from the patient’s brain in real time and project it to the HoloLens, onto a template of a brain that we plot on the patient’s head. So basically you are with the patient in the dental chair, you walk around the patient, and you can see on their head this brain showing all the colors of activation or deactivation. You are seeing pain by looking at the processes taking place in their brain. That has a real wow factor, because you are seeing the brain in the patient. “Doc, can you see my pain?” “Yes, I can see it. Actually, it’s affecting your frontal cortex more, or your emotional areas more, or your sensory cortex more.”

Alex DaSilva (07:20):

So we are using the data we extract from the sensors, from the neuroimaging device, for XR. Now we are processing it in real time to determine who is in pain or not, and where the pain is, because the brain has a map of our body in the [unknown] area, and with that we can see whether the pain is on the right side or the left side. We are still developing that. But the point is that not just researchers but also clinicians and students can see pain as it takes place in our patients, through the immersive experience of XR, right? They’re seeing it and walking around it in 3D. The other beautiful thing about pain is that it is a very diverse experience. As I mentioned, there is the sensory part, the emotional part, the cognitive part, your memories, and things like that. Depending on which areas of the brain you see this taking place in, you might decide on one type of treatment or another. So you are really using neuroimaging and XR to help patients with pain, and also for education, and research for sure.

Jeremy Nelson (08:59):

Yeah. That’s fascinating. So you could start to categorize where common symptoms might be lighting up? Or how are you using what the patient is describing, compared to what you’re seeing, to make those decisions?

Alex DaSilva (09:14):

Yeah, that’s a good question. As we discussed, if you are expecting pain, you actually suffer more, because you are focusing much more on the pain, and these expectations or emotions associated with pain, even before it starts, modulate your experience. They take place in different areas of the brain, and this is a really fast process. So you can start to dissect that for each type of pain experience or pain disorder, for example migraine, fibromyalgia, or even emergencies. In the future, you could help patients even when they cannot express the suffering they are having. One project that extends this one, which we are getting IRB approval for, is collecting data from patients during a dental emergency.

Alex DaSilva (10:28):

We collect it while they are in pain, and with that we can see how the experience takes place. Let’s say some patients are more anxious than others before they sit in the dental chair: should we wait a moment and address the anxiety first, and then deal with the pain? And would that information help after the treatment? These are the things we are starting to dissect: the pain experience in humans, but in a very objective way, as it takes place, by looking directly into the brains of those patients.

Jeremy Nelson (11:26):

That’s fascinating. Yeah, I remember it was very interesting and unexpected. When I showed up, I didn’t quite know what to expect, and I was blown away at some level, because I was imagining you’d have to put the patient in a large MRI machine, take a scan, and then spend all that time processing it and bringing it back. But you put this cap on somebody and we were seeing it within minutes, right? With the software you built.

Alex DaSilva (11:51):

Yes, and this is how things have developed. XR in the past was in a closed room with these projections. It was fascinating, but what about making it portable? That’s where we are now, with all these devices for virtual reality and augmented reality, even glasses now. Now we have the ability to collect data and project it in real time. At the very beginning of XR, it was just: okay, you have the data, you project it. It was not continuous; it was not real time. Now we are starting to do that, taking advantage of the data we’re seeing while processing it at the same time. That’s why the next step, where XR is moving, is adding, in addition to the sensors, the artificial intelligence that can give us a closed loop.

Alex DaSilva (12:58):

That loop gives us the information to make decisions: not only to learn, but also to act, to help patients and students with that information. That’s why it’s also connected with learning health systems. XR’s role here is really to provide the immersive experience, because when you’re doing things like this in real time, you cannot just look at a screen off to the right or the left and say, “Let me type here and figure out what it is.” I remember at Massachusetts General Hospital, at Harvard, when we were first starting to use computers in pain treatment, it was disturbing for patients, because instead of looking at and talking with the patient, the doctors were typing on the computer, which completely disrupted the patient-doctor interaction. With XR, you are facilitating this process, because you’re looking at the patient and at the same time having an augmented reality experience, augmented information, along with the connection and the ability to help them.

Jeremy Nelson (14:30):

Right. And you’re hands-free, so you can continue to do what you need to do. And now with the new HoloLens 2, you can flip the display up so the patient can see your whole face. So there are some interesting affordances you’ll have as you move to the new devices.

Alex DaSilva (14:47):

That’s right. That’s right.

Jeremy Nelson (14:53):

Well, yeah, I remember there was another one. There was a VR experience, right, that I tried out, with the breathing. Can you talk about that? I’d forgotten about it; I just remembered it.

Alex DaSilva (15:03):

Oh, Jeremy, that’s actually something we’re very excited about now. I cannot share the exact results yet because we are submitting them, but I can explain the technology. What we did is called virtual reality breathing. With sensors, we tracked the patient breathing in and breathing out, the entire breathing cycle, and in virtual reality they could see 3D lungs synchronized with their breathing. With that, we created an immersive experience of mindful breathing, which is used for relaxation and pain relief. In this research, we compared healthy subjects during a painful experience who did only mindful breathing, focusing on breathing in and breathing out without seeing the image of the lungs, with a group who did see those lungs. In that group you see your own lungs in 3D, which makes it immersive.

Alex DaSilva (16:28):

And it’s like it’s printed in your brain. You cannot forget it any longer, because every time you try to do it again, you’re going to see those very engaging 3D lungs in your mind, synchronized with you. The interesting thing, which I cannot describe much yet, is that both techniques increased the pain threshold, meaning you only feel pain at a higher temperature. We apply heat to the subject’s skin, and they were less sensitive to pain. Both groups were, but they got there through completely different brain mechanisms. The XR experience used a different pathway to decrease pain. And that is fantastic.

Jeremy Nelson (17:35):

Right. Well, as you’re describing this, it’s coming back to me. I mean, this was what, nine or ten months ago that we met, and I can still picture it. I did it for maybe three or four minutes, right? Yeah. And it’s still imprinted.

Alex DaSilva (17:52):

It is. It is. And that’s sometimes the issue with complementary medicine, especially these more abstract approaches. Breathing is very helpful for relaxation and very helpful for pain relief. But at the same time, some patients have trouble visualizing or getting into the mood for breathing or meditation. They need some kind of support to get into the mood. So an XR experience that is synchronized with them and immersive really helps them get into mindful breathing, engaging the brain in a different way while also decreasing pain.

Jeremy Nelson (18:55):

Yeah. I’ve tried meditation, I’ve tried different apps and things like that, and it’s a struggle, right? I know people spend their whole lives trying to perfect it and reach those levels of consciousness. But I remember being in the headset, and I was there; I was cut off from everything else, right? And I had something to focus on. You know, I’m an engineer, so I liked having something to target my energy on. So…

Alex DaSilva (19:25):

Yeah. As I said, you need that support. For many patients, seeing is believing: they need the visual experience, or even the auditory experience, to engage with a particular technique or approach for pain relief. And that’s what is exciting. But what I found interesting is that the processes in the brain were completely different, yet both achieved the goal of decreasing pain, or pain sensitivity.

Jeremy Nelson (20:08):

Sure. That’s great. Well, there was another experiment I think we tried. What other work are you doing in XR? You’ve got the HoloLens, you’ve got the VR breathing. Was there another one? I remember there was something with two patients communicating.

Alex DaSilva (20:27):

Oh. So, as I told you, I was excited about this. We are developing projects where we can connect two brains and see how they engage. We are talking here, but imagine if we were in front of each other, talking and seeing each other: how does that process take place? Would I be able to modulate your brain, and vice versa? What are the pathways in the brain when two people connect? Think about a patient and a doctor, and better understanding that connection, which is sacred: how can we improve the patient-doctor relationship using objective measures from the brain? We have already done some tests where, in real time, we observed how two people in the roles of patient and doctor communicate. Then you can dissect trust, and dissect diversity, the role of diverse expectations. This is a really long process, but I started by dissecting one brain, and now I’m dissecting two brains that are connecting.

Jeremy Nelson (22:12):

That’s right, I remember now. Yeah, that’s very interesting work, very exciting cutting-edge research you’re doing. Do you have any concerns about the technology and the space, maybe in teaching and learning or research? You’ve been at the cutting edge, perhaps the bleeding edge, of some of this. Do you have concerns about the future, or areas we should be mindful of?

Alex DaSilva (22:42):

We are in a very difficult period for society. At the same time, we are opening up to technologies. We are talking about education and telemedicine, for example, and people are more open to that. Even the government is supporting it. I believe telemedicine is an area where XR can really help us. For patients, being able to connect with a remote doctor via a screen is very helpful, but sometimes you lose the human experience, the immersive experience of being in a room with the doctor. The other thing is that while we talk with the patient, we can also collect data at the same time. So XR will help future telemedicine, where you have a more immersive connection between patient and doctor even though they are in completely different places.

Alex DaSilva (24:16):

At the same time, we have to collect the data in a way that helps not only the patient we’re seeing, but many others. Another thing we are not paying attention to is that telemedicine, even in a virtual environment, can also help education, because we can integrate students into that environment so they learn through the process. For now, students are much more on the sidelines: you have only the doctor and the patient with a screen, and that’s it. XR can help integrate students into that process too. So if we are talking about different ways to treat patients with remotely located medical, dental, and other healthcare professionals, XR can help create that environment in the future and give a much more immersive experience, not only for the doctor, but also for the patient and the students.

Jeremy Nelson (25:32):

I love it. That’s great. Yeah. We’ve been having some conversations with the School of Nursing and the Medical School about how we can use the HoloLens or some of these other devices to continue teaching the next generation of healthcare professionals. So,

Alex DaSilva (25:48):

Yup. Yup. And it’s the right time, because we need to give options to our patients. We need to give options to all doctors and students, and XR can improve that dynamic.

Jeremy Nelson (26:15):

Yeah, I agree. Let’s make it happen. Well, you’re already setting the stage a bit for this, but what do you want to see Michigan do with XR? You just alluded to the possibilities, but is there anything specific, anything tangible you want to see us do with XR as we continue to expand our XR initiative, or just broadly across the university?

Alex DaSilva (26:41):

You know, there is something very important about the University of Michigan: the diversity we have. Innovation comes from diversity: diversity of people, of backgrounds, of technologies, of perspectives. And we have that treasure here at the University of Michigan. The more we create initiatives that integrate that diversity of ideas, even for the use of XR, the more innovative we will be. Initiatives like your podcast, but also different schools working together, or complementary teams. For example, a bioengineer has a mindset that is amazing for solving problems related to devices or technologies. A clinician has the mindset of applying something to help the patient. But then if you look to a [unknown], it’s about how to use that information, create artificial intelligence to recognize things, and close the loop to make better decisions.

Alex DaSilva (28:10):

We already have amazing, diverse initiatives across the university, and the more we create initiatives that integrate them, and have the patience to integrate them, the better, because it’s a long process. Everyone has a different language. If you talk with a bioengineer, [inaudible] they talk over our heads; when an AI person talks to a learning health system person, it’s like, “What are you talking about?” But if you find a particular application that is relevant and significant to one problem, and you are patient enough for the groups to understand each other, they will create something, and it comes from diversity. If you only have these initiatives isolated in silos by group, they always have the same mindset. I keep saying that I’m always kind of the stupid person in the room, because I’m always trying to challenge myself with people from different areas. Feeling comfortable with different languages and different perspectives really helps with innovation, and XR is an amazing place for that, because you are creating environments where all these groups can come up with more ideas and more solutions.

Jeremy Nelson (30:01):

That’s great. I think that was one of the reasons I was drawn to the initiative and the position: the diverse set of ideas and people working in this space, folks like yourself. There was a lot to work with here, and I think we’re already starting to see some of it come together. Well, this has been a great conversation. I’ve really enjoyed learning more about how you got started, how you’re thinking about this, and where you’re taking it, and I really appreciate your time today. Thank you so much.

Alex DaSilva (30:31):

Thank you very much, Jeremy, and congratulations again on your initiative. Speaking of diversity, I remember the last meeting we were at together: there were people from every school at the University. For me, that was a good sign that the group is going in the right direction.

Jeremy Nelson (30:55):

I agree. Well, thank you so much. Have a great day.

Alex DaSilva (30:58):

Have a great day.

Jeremy Nelson (31:12):

Thank you for joining us today. Our vision for the XR initiative is to enable education at scale that is hyper-contextualized using XR tools and experiences. Please subscribe to our podcast and check out more about our [email protected] slash XR.